Google just made a big move in the AI infrastructure arms race, elevating Amin Vahdat to chief technologist for AI infrastructure, a newly created position that reports directly to CEO Sundar Pichai, according to an internal memo first reported by Semafor and later confirmed by TechCrunch. It's a sign of how important this work has become as Google pours up to $93 billion into capital expenditures by the end of 2025, a number that parent company Alphabet expects to be significantly larger next year.

Vahdat is not new to the game. The computer scientist, who holds a PhD from UC Berkeley and started out as a research intern at Xerox PARC in the early 1990s, has been quietly building Google's AI backbone for the past 15 years. Before joining Google in 2010 as an engineering fellow and vice president, he was an associate professor at Duke University and later a professor and SAIC Chair at the University of California, San Diego. His academic credentials are formidable, with what appears to be 395 published papers to his name, and his research has always focused on making computers work more efficiently at scale.

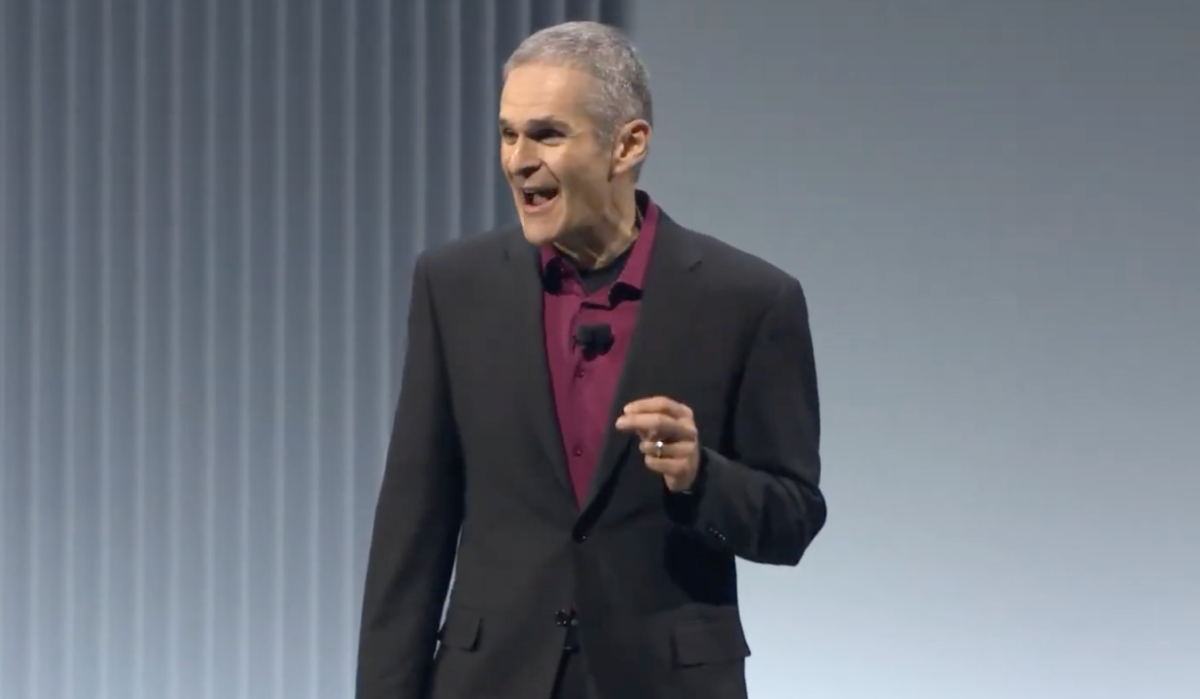

Vahdat already holds a high-profile position at Google. Just eight months ago, at Google Cloud Next, he took the stage in his role as vice president and general manager of ML, Systems and Cloud AI to unveil the company's seventh-generation TPU, called Ironwood. The specifications he showed off at the event were striking: more than 9,000 chips per pod delivering 42.5 exaflops of compute, more than 24 times the power of the world's No. 1 supercomputer at the time. "The demand for AI computing has increased by a factor of 100 million in just eight years," he told the audience.

Behind the scenes, as Semafor noted, Vahdat has been orchestrating the unglamorous but essential work that keeps Google competitive. That includes the custom TPU chips for AI training and inference that give Google an edge over rivals like OpenAI, as well as Jupiter, the ultra-fast internal network that lets all of its servers talk to each other and move massive amounts of data. (In a blog post late last year, Vahdat said Jupiter now scales to 13 petabits per second, explaining that's enough bandwidth to theoretically support a video call for all 8 billion people on Earth simultaneously.)

Vahdat has also been deeply involved in the ongoing development of Borg, Google's cluster management system, which acts as the brain coordinating all the work that happens across its data centers. And he has overseen the development of Axion, Google's first custom Arm-based general-purpose CPUs designed for data centers, which the company unveiled last year and continues to build out.

In short, Vahdat is central to Google's AI story.

In fact, in a market where top AI talent commands astronomical compensation and faces constant recruiting pressure, Google's decision to elevate Vahdat to this new role may also be about retention. When you've spent 15 years building someone into a linchpin of your AI strategy, you make sure they stay.
